- The Ethical Imperative of Responsibility in AI Innovation

- Transparency and Accountability in AI Development

- Fairness and Diversity in AI Algorithms

- Human-Centric Design and Decision-Making

- Regulatory Frameworks and Governance Mechanisms

As artificial intelligence (AI) advances rapidly and its applications reach into more aspects of daily life, responsibility becomes central to how the technology develops. Establishing guidelines that prioritize ethical considerations, transparency, and accountability is therefore essential. By adhering to these principles, the industry can harness the transformative power of AI while mitigating its risks and its harmful effects on society.

The Ethical Imperative of Responsibility in AI Innovation

Artificial intelligence has the potential to revolutionize industries, enhance efficiency, and drive innovation to unprecedented heights. However, this transformative power must be wielded responsibly to ensure that AI technologies do not perpetuate biases, violate privacy rights, or undermine fundamental human values. Ethical considerations should form the cornerstone of AI development, guiding researchers, developers, and policymakers in making informed decisions that prioritize the well-being of individuals and society as a whole.

Transparency and Accountability in AI Development

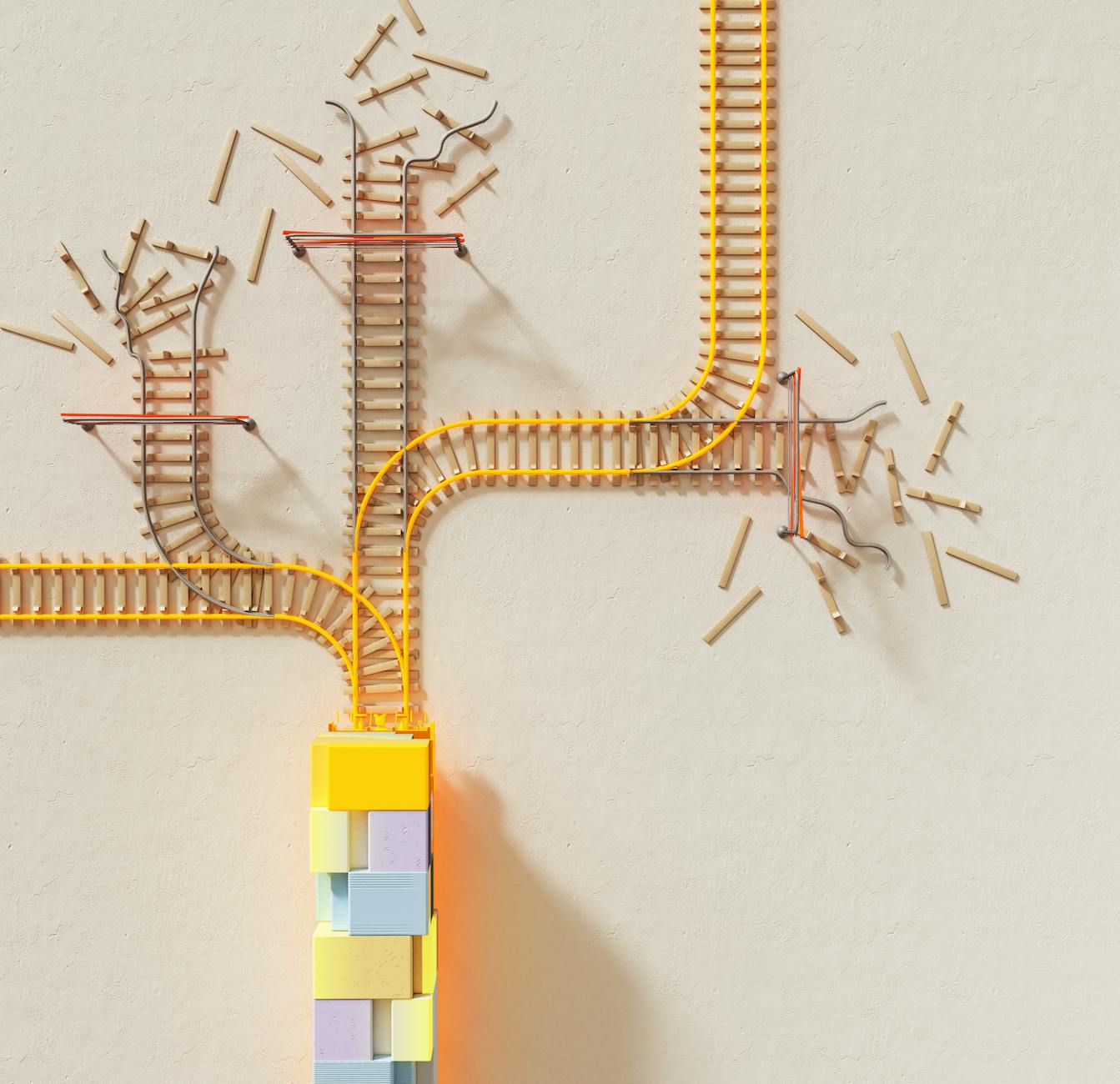

Transparency is a crucial aspect of responsible AI innovation because it builds trust among stakeholders and allows algorithms and decision-making processes to be scrutinized. Developers should strive to make AI systems explainable and interpretable, so that users can understand how decisions are made and can seek recourse when errors or unintended consequences occur. Clear lines of accountability, which specify who answers for a system's outputs, help organizations uphold ethical standards and address concerns about bias, discrimination, and unfair algorithmic outcomes.

Fairness and Diversity in AI Algorithms

Fairness in AI algorithms is another critical consideration, and it depends on inclusivity and diversity in data collection, model training, and system evaluation. Biases present in training data can propagate through AI systems and produce discriminatory outcomes that reinforce social inequalities; for example, a model trained on historical hiring decisions can learn to reproduce past preferences for certain groups of applicants. To address this challenge, developers must implement strategies to detect and mitigate bias, promote diversity in the teams building AI systems, and engage with diverse stakeholders so that AI technologies are inclusive and equitable.
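One common starting point for detecting bias is to compare how often a model makes a positive prediction for each demographic group. The sketch below computes a demographic parity difference in plain Python. The function names, the toy data, and the 0.1 review threshold are illustrative assumptions rather than a standard, and demographic parity is only one of several fairness definitions, some of which can conflict with one another. A check like this flags a disparity; it does not mitigate it.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the fraction of positive predictions (1) for each group."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Largest gap in selection rate between any two groups (0.0 means equal rates)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


def needs_review(predictions, groups, threshold=0.1):
    """Hypothetical policy: flag the model for human review if the gap exceeds threshold."""
    return demographic_parity_difference(predictions, groups) > threshold


if __name__ == "__main__":
    # Toy data (made up for illustration): model decisions for two groups, "a" and "b".
    preds = [1, 0, 1, 1, 0, 0, 1, 0]
    groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
    print(selection_rates(preds, groups))                # {'a': 0.75, 'b': 0.25}
    print(demographic_parity_difference(preds, groups))  # 0.5
    print(needs_review(preds, groups))                   # True
```

In practice the acceptable gap, the choice of fairness metric, and the groups to compare are policy decisions that call for input from the diverse stakeholders described above, not just from developers.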

Human-Centric Design and Decision-Making

Human-centric design principles emphasize user well-being, autonomy, and decision-making agency in the development and deployment of AI systems. Designing AI technologies around user needs and preferences can improve usability, foster trust, and empower individuals to make informed choices. Principles and practices such as privacy by design, consent management, and user feedback mechanisms help keep human values and preferences central to the design and implementation of AI-driven solutions.

Regulatory Frameworks and Governance Mechanisms

Regulatory frameworks and governance mechanisms play a crucial role in ensuring that AI innovation aligns with ethical standards, legal requirements, and societal expectations. Policymakers, industry stakeholders, and civil society organizations need to collaborate on robust regulations that govern the responsible use of AI technologies, safeguard individual rights, and promote accountability. Oversight bodies, ethical review boards, and processes for auditing and certification can strengthen transparency and compliance across the AI ecosystem.

In conclusion, responsibility in AI innovation is not just a moral imperative but a strategic necessity for building a sustainable and inclusive future. By adhering to ethical guidelines, promoting transparency, fairness, and diversity, and prioritizing human-centric design principles, the AI industry can harness the potential of technology for the benefit of society. Embracing responsibility in AI innovation requires collective effort, continuous learning, and a commitment to upholding ethical values in the pursuit of technological advancement. Only by integrating responsibility into the fabric of AI development can we ensure that innovation serves humanity’s best interests and contributes to a more equitable and resilient future.